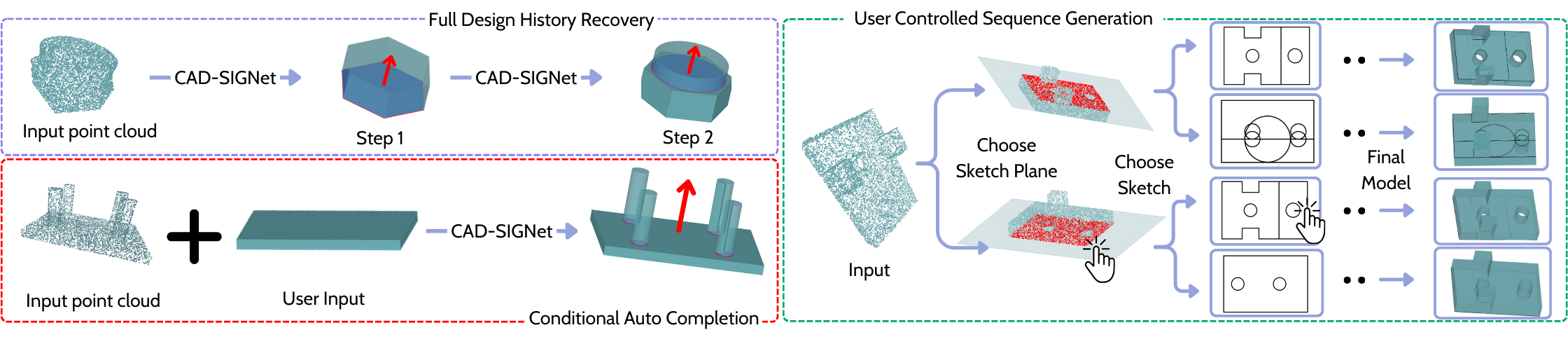

Figure: Full design history recovery from an input point cloud (top-left) and user interaction with CAD-SIGNet (bottom-left and right).

We propose CAD-SIGNet, an end-to-end trainable and auto-regressive architecture that recovers the design history of a CAD model, represented as a sequence of sketch-and-extrusion operations, from an input point cloud. Our model learns visual-language representations through layer-wise cross-attention between point cloud and CAD language embeddings. In particular, our main contributions are:

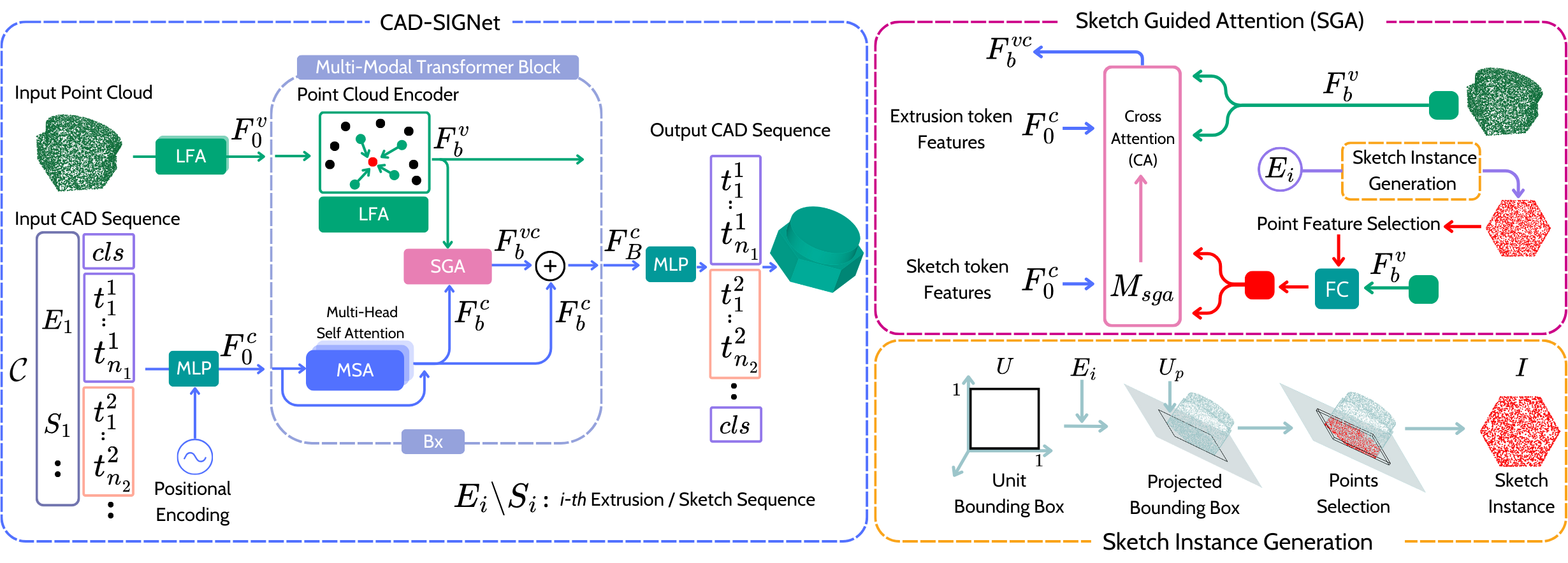

Figure: Method overview. CAD-SIGNet (left) is composed of \(\mathbf B\) Multi-Modal Transformer blocks, each consisting of an \(\operatorname{LFA}\) module that extracts point features, \(\mathbf F_{b}^v\), and an \(\operatorname{MSA}\) module that extracts token features, \(\mathbf F_{b}^c\). An SGA module (top-right) combines \(\mathbf F_{b}^v\) and \(\mathbf F_{b}^c\) for CAD visual-language learning. A sketch instance (bottom-right), \(\mathbf I\), obtained from the predicted extrusion tokens, is used to apply a mask, \(\mathbf M_{\text{sga}}\), during the cross-attention in the SGA module to predict sketch tokens.
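The caption describes the SGA module as a cross-attention between token features \(\mathbf F_{b}^c\) and point features \(\mathbf F_{b}^v\), masked by \(\mathbf M_{\text{sga}}\) so that sketch tokens are predicted from the points of the sketch instance \(\mathbf I\). The NumPy snippet below is a minimal single-head sketch of such a masked cross-attention under our own assumptions: tokens act as queries, points act as keys and values, \(\mathbf I\) is given as a per-point boolean membership vector, and non-sketch tokens attend to all points. The helpers `build_sga_mask` and `masked_cross_attention` are hypothetical and are not taken from the CAD-SIGNet implementation.

```python
import numpy as np


def softmax(x, axis=-1):
    # Subtract the row max for numerical stability before exponentiating.
    x = x - np.max(x, axis=axis, keepdims=True)
    e = np.exp(x)
    return e / np.sum(e, axis=axis, keepdims=True)


def build_sga_mask(in_instance, is_sketch_token):
    """Hypothetical construction of M_sga as a (T, N) boolean mask.

    in_instance: (N,) bool, True for points assumed to lie in sketch instance I.
    is_sketch_token: (T,) bool, True for tokens assumed to be sketch tokens.
    True in the result means "this token may attend to this point".
    """
    allowed = np.ones((is_sketch_token.shape[0], in_instance.shape[0]), dtype=bool)
    # Sketch tokens only see instance points; all other tokens see every point.
    allowed[is_sketch_token] = in_instance
    return allowed


def masked_cross_attention(f_c, f_v, w_q, w_k, w_v, allowed):
    """Single-head cross-attention: tokens f_c (T, d) query points f_v (N, d)."""
    if not allowed.any(axis=1).all():
        # A row with no allowed point would produce NaNs in the softmax.
        raise ValueError("every token must be allowed to attend to at least one point")
    q = f_c @ w_q                              # (T, d_k)
    k = f_v @ w_k                              # (N, d_k)
    v = f_v @ w_v                              # (N, d_v)
    scores = q @ k.T / np.sqrt(q.shape[-1])    # (T, N) scaled dot products
    # Masked token-point pairs get -inf, i.e. exactly zero attention weight.
    scores = np.where(allowed, scores, -np.inf)
    weights = softmax(scores, axis=-1)
    return weights @ v, weights


# Toy usage with random features (T tokens, N points, feature size d).
rng = np.random.default_rng(0)
T, N, d = 4, 6, 8
f_c = rng.normal(size=(T, d))
f_v = rng.normal(size=(N, d))
w_q, w_k, w_v = (rng.normal(size=(d, d)) for _ in range(3))
in_instance = np.array([True, True, False, False, True, False])
is_sketch = np.array([False, True, True, False])

mask = build_sga_mask(in_instance, is_sketch)
out, attn = masked_cross_attention(f_c, f_v, w_q, w_k, w_v, mask)
print(out.shape)
print(np.allclose(attn[1][~in_instance], 0.0))
```

In this sketch the mask only changes which points each sketch token can draw information from; the projections and output shape are the same as in ordinary cross-attention, which is why a mask is a lightweight way to inject the sketch-instance prior.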

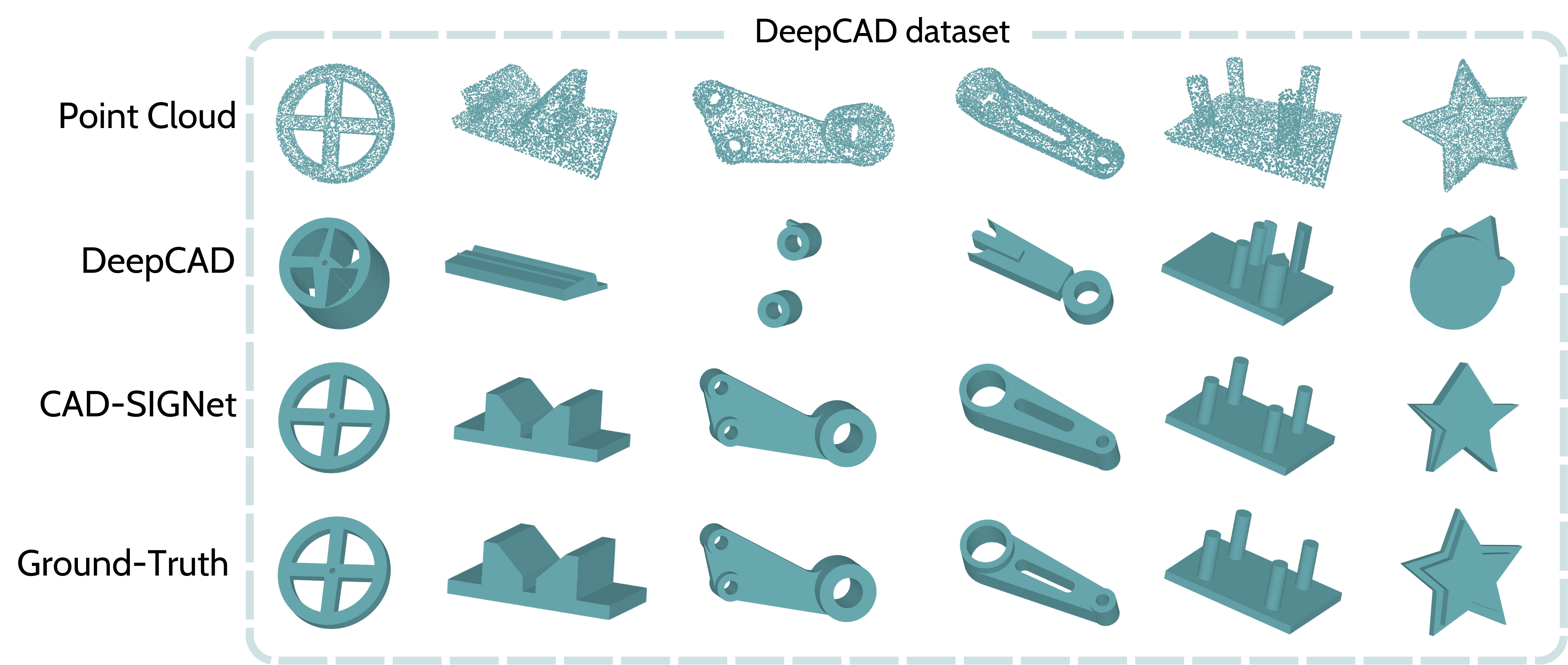

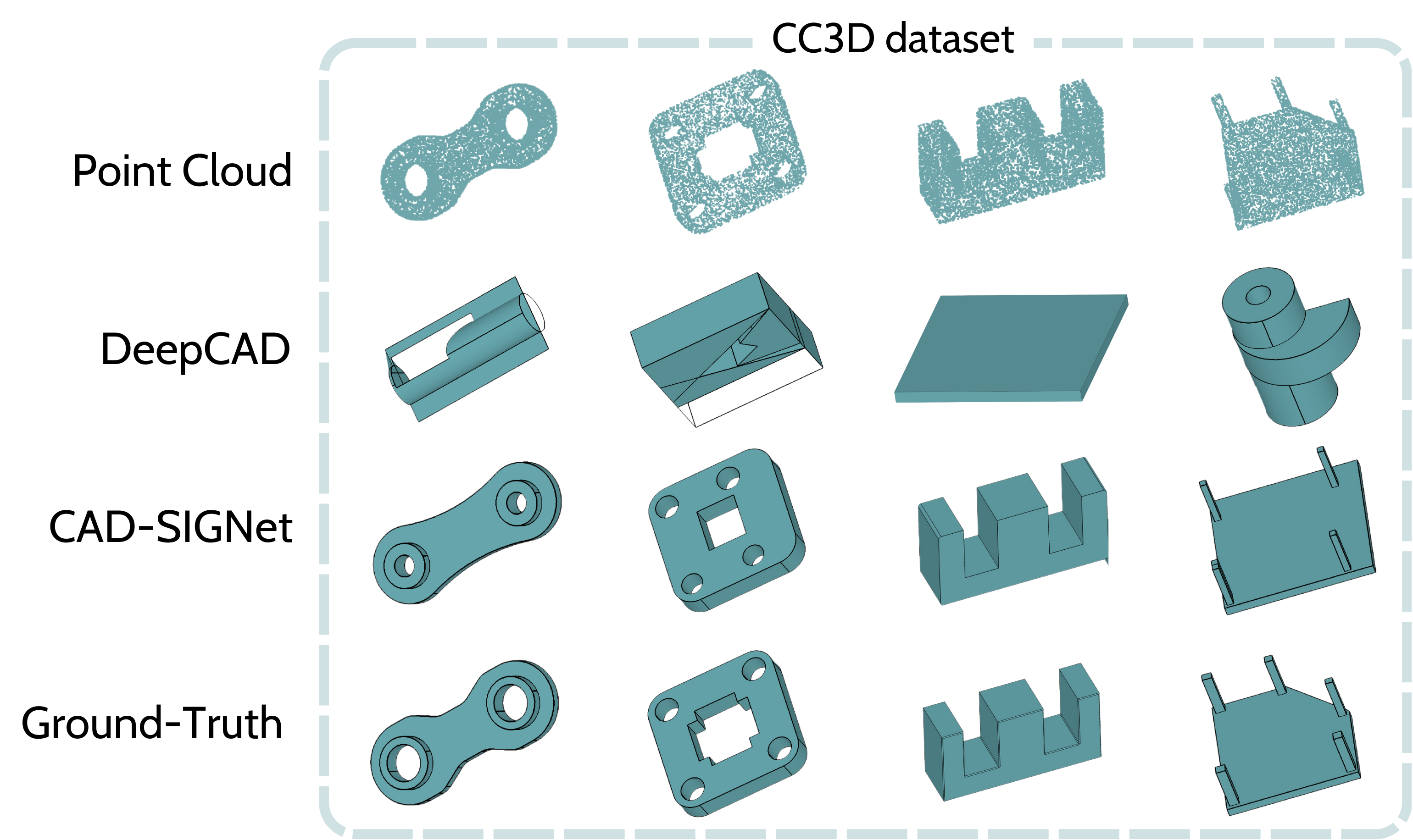

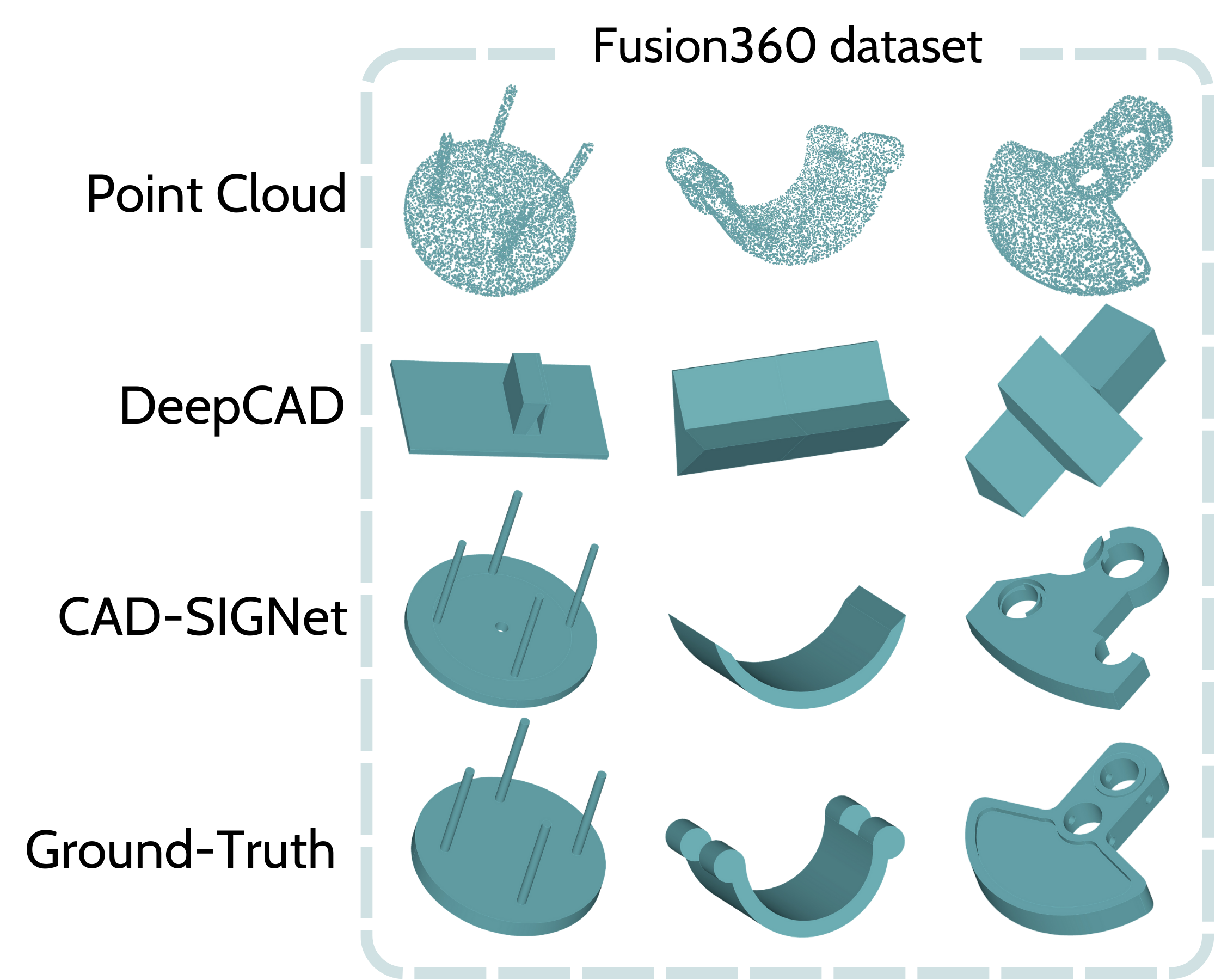

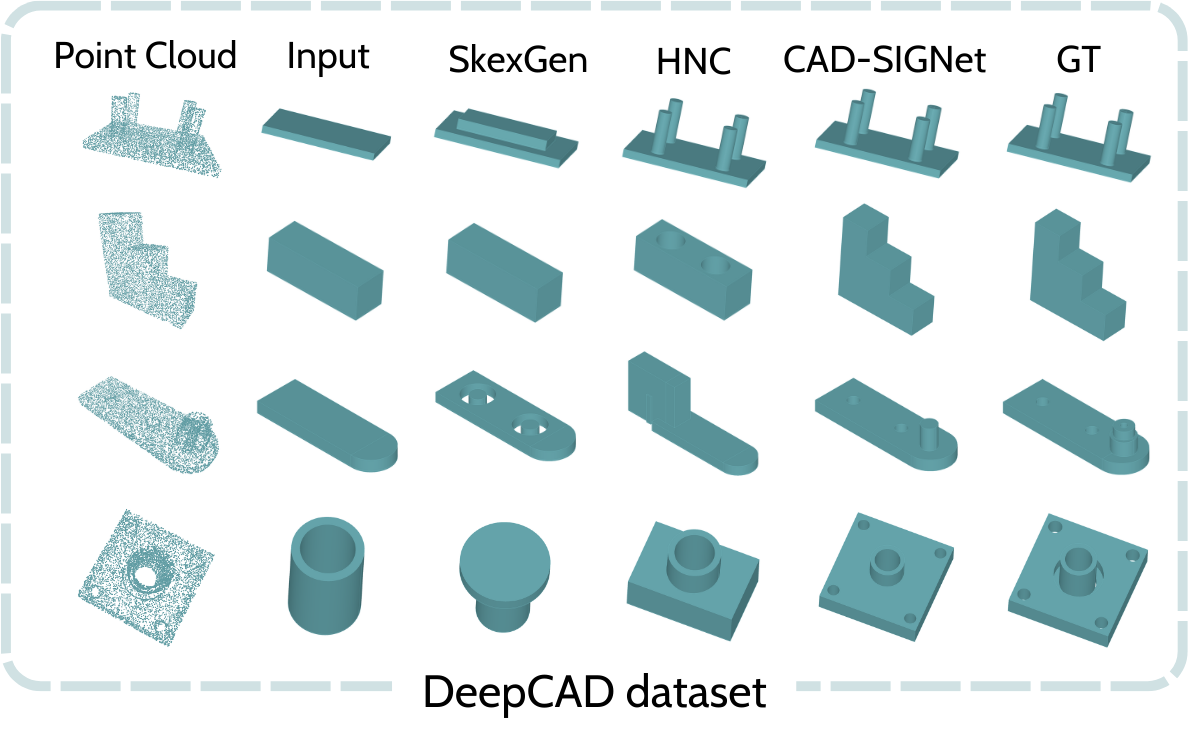

We evaluated CAD-SIGNet on two reverse engineering scenarios:

For scenario (1), DeepCAD is used as the baseline. For scenario (2), SkexGen and HNC are used as baselines.

The present project is supported by the National Research Fund, Luxembourg, under the BRIDGES2021/IS/16849599/FREE-3D and IF/17052459/CASCADES grants, and by Artec3D.

If you like our work, please cite.